In a move that marks the end of the "GPU-only" era for the world’s leading artificial intelligence lab, OpenAI has officially transitioned into a vertically integrated hardware powerhouse. As of early 2026, the company has solidified its custom silicon strategy, moving beyond its role as a software developer to become a major player in semiconductor design. Through deep strategic alliances with Broadcom (NASDAQ: AVGO) and TSMC (NYSE: TSM), OpenAI is now deploying its first generation of in-house AI inference chips, an effort designed to break its near-total dependency on NVIDIA (NASDAQ: NVDA) and rewrite the economics of large-scale AI.

This shift represents a massive gamble on "Silicon Sovereignty": the idea that to achieve Artificial General Intelligence (AGI), a company must control the entire stack, from the foundational code to the very transistors that execute it. The immediate significance is economic. By bypassing the "NVIDIA tax" and designing chips tailored specifically for its proprietary Transformer architectures, OpenAI aims to reduce its compute costs by as much as 50%. That reduction is essential for the commercial viability of its increasingly complex "reasoning" models, which require significantly more compute per query than previous generations.

The Architecture of "Project Titan": Inside OpenAI’s First ASIC

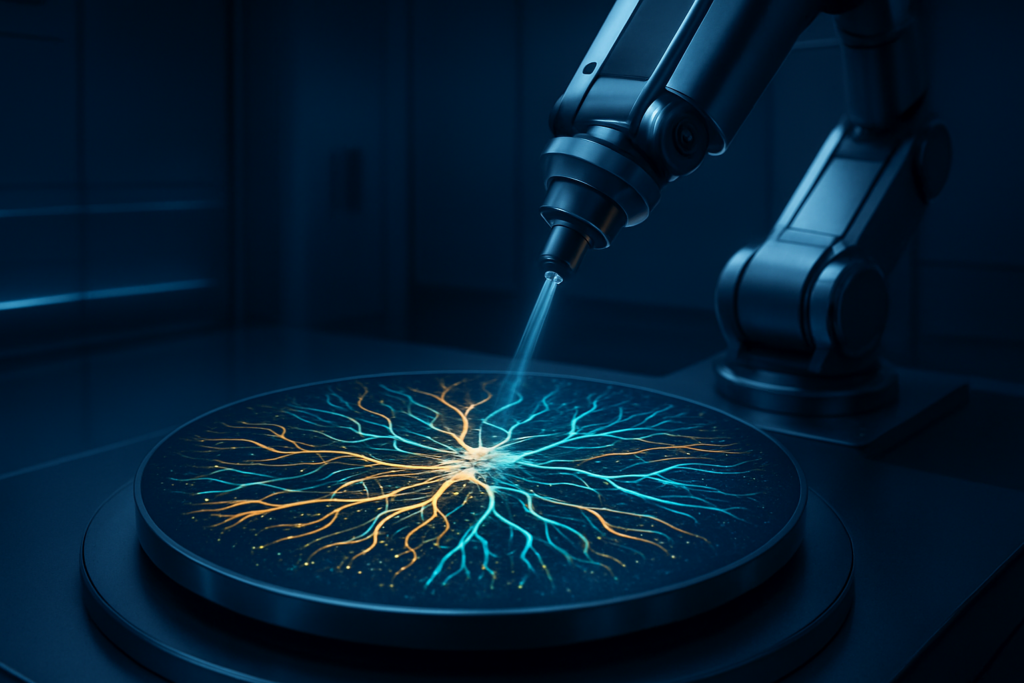

At the heart of OpenAI’s hardware push is a custom Application-Specific Integrated Circuit (ASIC) often referred to internally as "Project Titan." Unlike the general-purpose H100 or Blackwell GPUs from NVIDIA, which are built to handle a wide variety of workloads from graphics to scientific simulation, OpenAI’s chip is a specialized "XPU" optimized almost exclusively for inference, the process of running a pre-trained model to generate responses. Led by Richard Ho, the former lead of the Google (NASDAQ: GOOGL) TPU program, the engineering team has adopted a systolic array design. In this architecture, operands are passed directly from one processing element to its neighbor across a grid, so each value fetched from memory is reused many times. That reuse minimizes the energy-intensive data movement that plagues traditional chip designs.
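To make the dataflow concrete, here is a minimal Python sketch of an output-stationary systolic array computing a matrix product. It illustrates the general technique only: the function name `systolic_matmul`, the grid dimensions, and the skewed input schedule are assumptions made for this example and say nothing about the actual "Project Titan" design.

```python
def systolic_matmul(A, B):
    """Simulate C = A @ B on an m x n grid of processing elements (PEs).

    Hypothetical illustration: rows of A enter from the left edge and
    columns of B enter from the top edge, each skewed by one cycle per
    row/column. Every PE multiplies the two values it receives, adds the
    product to a local accumulator, then forwards the A value to its right
    neighbour and the B value to the neighbour below it.
    """
    m, k = len(A), len(A[0])
    n = len(B[0])
    acc = [[0] * n for _ in range(m)]    # one accumulator per PE
    a_reg = [[0] * n for _ in range(m)]  # A values travelling rightwards
    b_reg = [[0] * n for _ in range(m)]  # B values travelling downwards

    cycles = m + n + k - 2               # cycles until the last PE finishes
    for t in range(cycles):
        # Walk the grid from bottom-right to top-left so that each PE reads
        # its neighbours' values from the previous cycle.
        for i in reversed(range(m)):
            for j in reversed(range(n)):
                if j == 0:
                    s = t - i            # skewed feed from the left edge
                    a_in = A[i][s] if 0 <= s < k else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j            # skewed feed from the top edge
                    b_in = B[s][j] if 0 <= s < k else 0
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
                acc[i][j] += a_in * b_in
    return acc, cycles
```

In this sketch each element of `A` and `B` is read from "memory" once at the edge of the grid and then reused by every PE it passes through, which is the source of the efficiency gain described above. For two 2x2 matrices the simulation completes in 4 cycles.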

Technical specifications for the 2026 rollout are formidable. The first generation of chips, manufactured on TSMC’s 3nm (N3) process, incorporates High Bandwidth Memory (HBM3E) to handle the massive parameter counts of the GPT-5 and o1-series models. Looking further ahead, OpenAI has already secured capacity for TSMC’s upcoming A16 (1.6nm) node, which is expected to integrate HBM4 and deliver a 20% increase in power efficiency. OpenAI has also opted for an "Ethernet-first" networking strategy, utilizing Broadcom’s Tomahawk switches and optical interconnects. This allows OpenAI to scale its custom silicon across massive clusters without the proprietary lock-in of NVIDIA’s InfiniBand or NVLink technologies.

The development process itself was a landmark for AI-assisted engineering. OpenAI reportedly used its own "reasoning" models to optimize the physical layout of the chip, achieving area reductions and thermal efficiencies that human engineers alone might have taken months to perfect. This "AI-designing-AI" feedback loop has allowed OpenAI to move from initial concept to a "taped-out" design in record time, surprising many industry veterans who expected the company to spend years in the R&D phase.

Reshaping the Semiconductor Power Dynamics

The market implications of OpenAI’s silicon strategy have sent shockwaves through the tech sector. While NVIDIA remains the undisputed king of AI training, OpenAI’s move to in-house inference chips has begun to erode NVIDIA’s dominance in the high-margin inference market. Analysts estimate that by late 2025, inference accounted for over 60% of total AI compute spending, and OpenAI’s transition could represent billions in lost revenue for NVIDIA over the coming years. Despite this, NVIDIA continues to thrive on the back of its Blackwell and upcoming Rubin architectures, though its once-impenetrable "CUDA moat" is showing signs of stress as OpenAI shifts its software to the hardware-agnostic Triton framework.

The clear winners in this new paradigm are Broadcom and TSMC. Broadcom has effectively become the "foundry for the fabless," providing the essential intellectual property and design platforms that allow companies like OpenAI and Meta (NASDAQ: META) to build custom silicon without owning a single factory. For TSMC, the partnership reinforces its position as the indispensable foundation of the global economy; with its 3nm and 2nm nodes fully booked through 2027, the Taiwanese giant has implemented price hikes that reflect its immense leverage over the AI industry.

This move also places OpenAI in direct competition with the "hyperscalers"—Google, Amazon (NASDAQ: AMZN), and Microsoft (NASDAQ: MSFT)—all of whom have their own custom silicon programs (TPU, Trainium, and Maia, respectively). However, OpenAI’s strategy differs in its exclusivity. While Amazon and Google rent their chips to third parties via the cloud, OpenAI’s silicon is a "closed-loop" system. It is designed specifically to make running the world’s most advanced AI models economically viable for OpenAI itself, providing a competitive edge in the "Token Economics War" where the company with the lowest marginal cost of intelligence wins.

The "Silicon Sovereignty" Trend and the End of the Monopoly

OpenAI’s foray into hardware fits into a broader global trend of "Silicon Sovereignty." In an era where AI compute is viewed as a strategic resource on par with oil or electricity, relying on a single vendor for hardware is increasingly seen as a catastrophic business risk. By designing its own chips, OpenAI is insulating itself from supply chain shocks, geopolitical tensions, and the pricing whims of a monopoly provider. This is a significant milestone in AI history, echoing the moment when early tech giants like IBM (NYSE: IBM) or Apple (NASDAQ: AAPL) realized that to truly innovate in software, they had to master the hardware beneath it.

However, this transition is not without its concerns. The sheer scale of OpenAI’s ambitions, exemplified by the planned $500 billion "Stargate" supercomputer project, has raised questions about energy consumption and environmental impact. OpenAI’s roadmap targets a staggering 10 GW to 33 GW of compute capacity by 2029, a figure on the order of ten to thirty-plus large nuclear reactors, assuming roughly 1 GW of output per reactor. Critics argue that the race for silicon sovereignty is accelerating an unsustainable energy arms race, even if the custom chips themselves are more efficient than the general-purpose GPUs they replace.
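As a rough sanity check on the 10 GW to 33 GW range quoted above, the short Python sketch below converts a sustained power draw into annual energy and into an equivalent number of reactors. The 1 GW-per-reactor figure and the utilization parameter are illustrative assumptions, not numbers from OpenAI's roadmap.

```python
HOURS_PER_YEAR = 8760        # 365 days x 24 hours
ASSUMED_REACTOR_GW = 1.0     # assumption: output of one large reactor


def annual_energy_twh(capacity_gw, utilization=1.0):
    """Energy drawn in one year, in TWh, at the given average utilization."""
    return capacity_gw * HOURS_PER_YEAR * utilization / 1000


def reactor_equivalents(capacity_gw, reactor_gw=ASSUMED_REACTOR_GW):
    """Number of reactors of the assumed size needed to supply the load."""
    return capacity_gw / reactor_gw


for gw in (10, 33):
    print(f"{gw} GW: {annual_energy_twh(gw):.1f} TWh/year, "
          f"about {reactor_equivalents(gw):.0f} reactors")
```

Under these assumptions, 10 GW running continuously works out to 87.6 TWh per year; lowering `utilization` scales that figure down proportionally.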

Furthermore, the "Great Decoupling" from NVIDIA’s CUDA platform marks a shift toward a more fragmented software ecosystem. While OpenAI’s Triton language makes it easier to run models on various hardware, the industry is moving away from a unified standard. This could lead to a world where AI development is siloed within the hardware ecosystems of a few dominant players, potentially stifling the open-source community and smaller startups that cannot afford to design their own silicon.

The Road to Stargate and Beyond

Looking ahead, the next 24 months will be critical as OpenAI scales its "Project Titan" chips from initial pilot racks to full-scale data center deployment. The long-term goal is the integration of these chips into "Stargate," the massive AI supercomputer being developed in partnership with Microsoft. If successful, Stargate will be the largest concentrated collection of compute power in human history, providing the "compute-dense" environment necessary for the next leap in AI: models that can reason, plan, and verify their own outputs in real time.

Future iterations of OpenAI’s silicon are expected to lean even more heavily into "low-precision" computing. Experts predict that by 2027, OpenAI will be using 8-bit INT8 or even 4-bit FP4 precision for its most advanced reasoning tasks, since fewer bits per value mean higher throughput and lower power consumption. The challenge remains the integration of these chips with emerging memory technologies like HBM4, which will be necessary to keep up with the exponential growth in model parameters.
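The trade-off behind low-precision inference can be seen in a small Python sketch of symmetric per-tensor INT8 quantization. This is a generic textbook scheme chosen for illustration; the helper names `quantize_int8` and `dequantize` are hypothetical, and nothing here is claimed to be the method OpenAI uses. FP4 follows the same idea with far fewer representable values and is not shown.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the INT8 range.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale factor."""
    return [q * scale for q in quantized]


weights = [0.82, -1.0, 0.031, 0.0, 0.47]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now occupies 8 bits instead of 16 or 32, at the cost of a rounding error of at most half a quantization step (`scale / 2`). Whether that error is acceptable for a given model is an empirical question that this sketch does not answer.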

Experts also predict that OpenAI may eventually expand its silicon strategy to include "edge" devices. While the current focus is on massive data centers, the ability to run high-quality inference on local hardware—such as AI-integrated laptops or specialized robotics—could be the next frontier. As OpenAI continues to hire aggressively from the silicon teams of Apple, Google, and Intel (NASDAQ: INTC), the boundary between an AI research lab and a semiconductor powerhouse will continue to blur.

A New Chapter in the AI Era

OpenAI’s transition to custom silicon is a definitive moment in the evolution of the technology industry. It signals that the era of "AI as a Service" is maturing into an era of "AI as Infrastructure." By taking control of its hardware destiny, OpenAI is not just trying to save money; it is building the foundation for a future where high-level intelligence is a ubiquitous and inexpensive utility. The partnership with Broadcom and TSMC has provided the technical scaffolding for this transition, but the ultimate success will depend on OpenAI's ability to execute at a scale that few companies have ever attempted.

The key takeaways are clear: the "NVIDIA monopoly" is being challenged not by another chipmaker, but by NVIDIA’s own largest customers. The "Silicon Sovereignty" movement is now the dominant strategy for the world’s most powerful AI labs, and the "Great Decoupling" from proprietary hardware stacks is well underway. As we move deeper into 2026, the industry will be watching closely to see if OpenAI’s custom silicon can deliver on its promise of 50% lower costs and 100% independence.

In the coming months, the focus will shift to the first performance benchmarks of "Project Titan" in production environments. If these chips can match or exceed the performance of NVIDIA’s Blackwell in real-world inference tasks, it will mark the beginning of a new chapter in AI history—one where the intelligence of the model is inseparable from the silicon it was born to run on.
